#TTS #Eleven Labs #AWS

Unlocking Faster Reasoning with o1-mini: A Look at OpenAI’s Small but Mighty Model

As the AI landscape continues to evolve, developers and businesses seek the perfect balance of speed, cost-efficiency, and capability. Enter o1-mini, OpenAI’s small reasoning model that boasts lightning-fast processing especially when it comes to coding and math without compromising on quality. Below, we’ll explore what o1-mini brings to the table, its pricing structure, and how it compares to other fine-tuning options in the market.

1. Why Choose o1-mini?

A. Faster Reasoning for Math and Code

While the larger o1 model excels at in-depth reasoning, o1-mini targets scenarios where you need quicker responses. If your use case leans heavily on coding tasks like debugging, generating snippets, or reviewing scripts or math-heavy workflows (from problem-solving to formula generation), o1-mini’s optimization can make a noticeable difference.

B. Cost-Effective Without Sacrificing Quality

o1-mini offers a cheaper entry point than many high-end LLMs (Large Language Models). Despite a smaller size, it strikes a balance of speed and reasoning power, making it ideal for startups or teams mindful of budget constraints.

C. Fits Seamlessly into Existing Workflows

With structured output support (inherited from the broader o1 family) and the ability to integrate tools, o1-mini slots easily into CI/CD pipelines, coding assistants, or other automated processes requiring real-time feedback.

2. o1-mini Pricing: At a Glance

| Model | Input Tokens | Cached Input Tokens* | Output Tokens |

|---|---|---|---|

| o1-mini | $3.00 / 1M | $1.50 / 1M | $12.00 / 1M |

| o1-mini-2024-09-12 | $3.00 / 1M | $1.50 / 1M | $12.00 / 1M |

Key Points

- 50% Discount on Cached Prompts: Repeated text within your requests is billed at half the normal input token rate.

- Output Token Costs: At $12.00 per 1M tokens, watch your output length if you want to keep a tight rein on expenses.

- Internal Reasoning Tokens: Output tokens include the internal “chain-of-thought” the model generates (though not visible in the final response), influencing how quickly your costs can add up.

Ideal Use Cases

- Frequent Code Generation: From automated code reviews to snippet generation, where speed is crucial.

- Quick Math Evaluations: Complex calculations or formula parsing that don’t require the context window of a larger model.

- Short-Form QA Systems: Chatbots or interactive tools where response speed trumps advanced, lengthy reasoning.

3. Fine-Tuning Your Model: Why and How

While o1-mini offers robust out-of-the-box capabilities, sometimes you need a custom flair—say, domain-specific jargon or a unique workflow. This is where fine-tuning comes in, allowing you to tailor any of OpenAI’s base models to your own data. You only pay for the tokens used during training and subsequent inference on that custom model.

A. Fine-Tuning Costs Across Models

| Model | Input Tokens | Batch / Cached Input Tokens | Output Tokens | Training Tokens |

|---|---|---|---|---|

| gpt-4o-2024-08-06 | $3.750 / 1M | $1.875 / 1M | $15.000 / 1M | $25.000 / 1M |

| gpt-4o-mini-2024-07-18 | $0.300 / 1M | $0.150 / 1M | $1.200 / 1M | $3.000 / 1M |

| gpt-3.5-turbo | $3.000 / 1M | $1.500 / 1M | $6.000 / 1M | $8.000 / 1M |

| davinci-002 | $12.000 / 1M | $6.000 / 1M | $12.000 / 1M | $6.000 / 1M |

| babbage-002 | $1.600 / 1M | $0.800 / 1M | $1.600 / 1M | $0.400 / 1M |

Important Notes

- Training Token Rate: You pay a separate rate for training tokens. After training, your inference costs revert to the base usage for your custom model.

- Batch API Discounts: If you can bundle multiple requests into one call, you can significantly lower your per-token costs.

- Cached Tokens: Repeated segments of text in your prompts are billed at a lower rate—helpful if you have standard instructions or format repeated for each request.

B. Why Fine-Tune o1-mini?

- Domain Customization

- If you’re using o1-mini for a specific field (like finance, law, or specialized technical jargon), training on your corpus can elevate its accuracy.

- Optimized Code Generation

- Train on your internal codebase or style guide so that the model consistently follows your coding conventions and patterns.

- Reduced Prompt Size

- By teaching the model your domain context upfront, you can shorten prompts during inference. This can also save on token costs in the long run.

4. Best Practices to Control Costs and Boost Performance

- Monitor Token Usage Religiously

- Both input and output tokens factor into your bill. Tools like built-in token counters or logging middleware can keep you informed.

- Leverage Batch API and Cached Tokens

- If your application sends repetitive prompts, group them. This allows you to get that sweet “Batch API” discount and the cached token rate.

- Shorten Output When Possible

- If you only need a concise answer, instruct the model to be brief. Every extra token can drive up expenses, especially at $12.00 per million for output.

- Experiment with Model Variants

- If o1-mini is too advanced or you need additional capabilities (e.g., bigger context, even faster speed), compare it to other GPT-4o or GPT-3.5 family models.

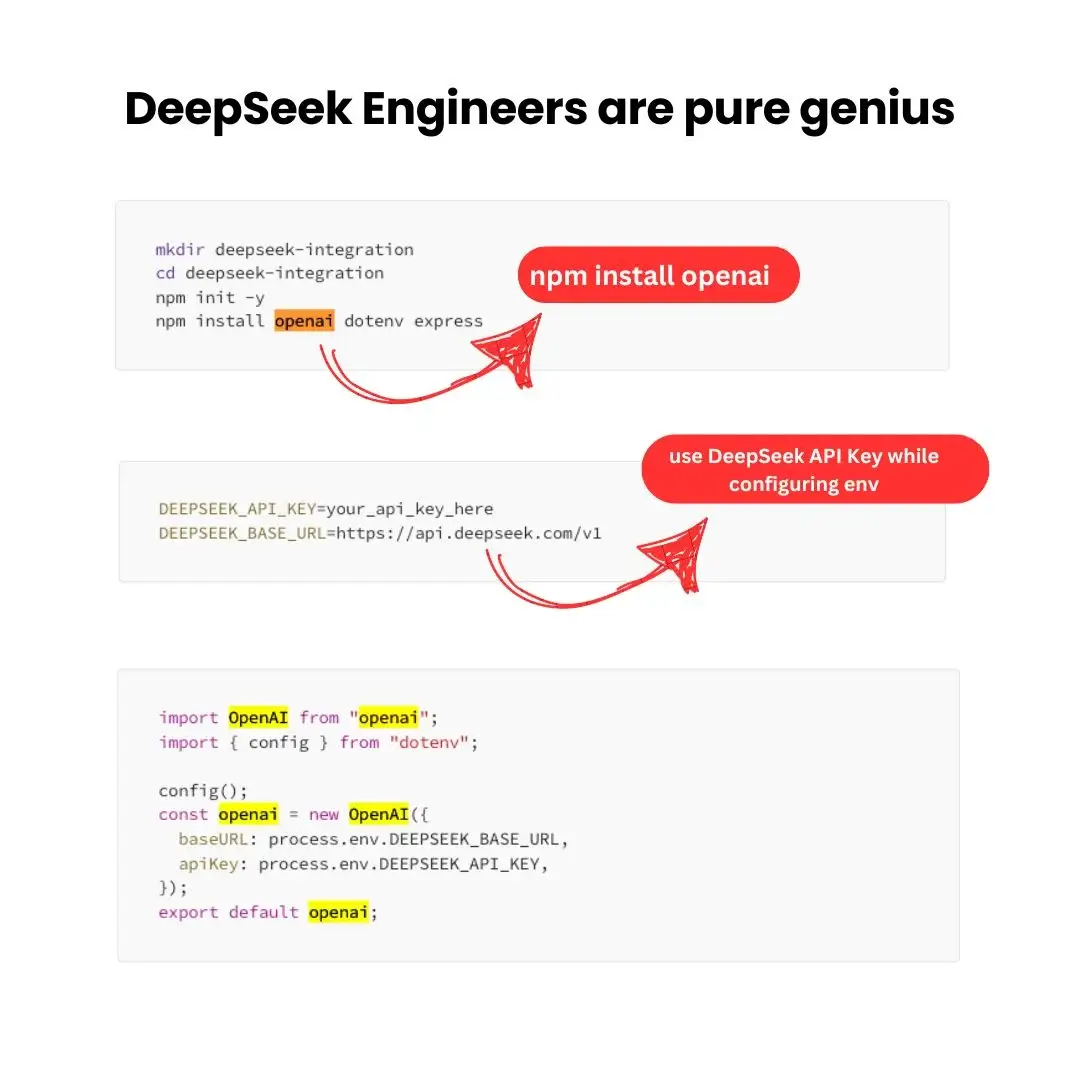

5. Integrating o1-mini into Your Workflow

A. Code-First Approach

Whether you’re using Python, Node.js, or another language, the integration process typically looks like this:

import { Configuration, OpenAIApi } from 'openai';

const configuration = new Configuration({

apiKey: process.env.OPENAI_API_KEY,

});

const openai = new OpenAIApi(configuration);

async function getO1MiniResponse(prompt) {

const response = await openai.createChatCompletion({

model: 'o1-mini',

messages: [{ role: 'user', content: prompt }],

});

return response.data.choices[0].message.content;

}Use environment variables to manage your API key securely and experiment with structured outputs where needed.

B. Building Real-Time Applications

- Chatbot: Combine with WebSockets or server-sent events for immediate feedback during user conversations.

- Math Solver or Coding Assistant: Pair with a front-end library (React, Vue, Angular) and let o1-mini handle requests asynchronously in the background.

6. Conclusion

With o1-mini, you don’t have to choose between fast performance and solid reasoning—you get both, optimized for coding and math tasks. Add in straightforward pricing, partial discounts via cached prompts, and seamless fine-tuning across the OpenAI ecosystem, and you’ve got a compelling option for many AI-driven applications.

Whether you’re a startup needing quick math solutions, a dev team automating code generation, or simply an innovator looking for a small but mighty AI to integrate into your product, o1-mini stands ready. And if you need a more specialized solution, fine-tuning offers a pathway to mold these models into a custom fit for your unique needs.

Keep in Mind

All pricing, features, and usage policies can change over time. For the most up-to-date information, always check OpenAI’s official documentation or announcements. And don’t forget to keep an eye on your token usage—cost optimization is key when experimenting with AI at scale.